From Virtualization to Containerization: The Evolution of Cloud Computing

There would be no cloud computing without virtualization. In a sense, cloud computing is virtualization carried out on a massive scale. And cloud computing, as every CIO knows, has become a key component of an enterprise’s IT architecture. So, what then is virtualization, which seems to be a cloud’s foundational element?

Virtualization

The idea of letting a user treat a shared resource as if it were theirs alone has been with us since the early 1960s. With the introduction of time-sharing computers, which in those days were confined to very limited professional circles, each user was granted exclusive use of an expensive mainframe’s computing power for a “slice” of time.

These days, when virtualization has become mainstream, any physical resource can be “sliced” – be it the server hardware, storage, networking, desktops, and so forth – so that multiple users can share that resource in a carefully partitioned way. In this post, we’ll concentrate on virtualization of the first type of resource – server hardware.

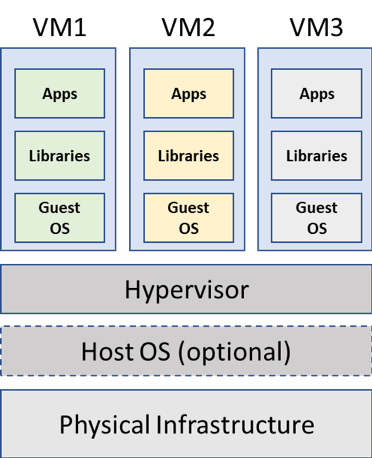

Virtualization works by running a type of software called a hypervisor on top of a physical server to emulate the underlying hardware (RAM, CPU, I/O, etc.) so that these resources can be used by multiple, fully isolated virtual machines (VMs) running on top of the hypervisor.

Each VM “thinks” it has exclusive use of the underlying resources, which the hypervisor ensures by carefully directing requests and responses back and forth between a VM and the hardware to maintain the single-machine illusion.
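The single-machine illusion can be made concrete with a toy model. The sketch below is only an illustration, not how any real hypervisor is implemented: the `ToyHypervisor` class, its method names, and the idea of modeling memory as a Python list are all assumptions made for this example. Each VM addresses its memory starting from 0, and the hypervisor translates that guest address into a slot in its own partition of the host's memory.

```python
class ToyHypervisor:
    """Toy model (hypothetical): partitions host memory among VMs."""

    def __init__(self, host_memory_size):
        self.host_memory = [None] * host_memory_size
        self.next_free = 0      # first host address not yet assigned to a VM
        self.partitions = {}    # VM name -> (base host address, partition size)

    def create_vm(self, name, size):
        if self.next_free + size > len(self.host_memory):
            raise MemoryError("host has no room for another VM of this size")
        self.partitions[name] = (self.next_free, size)
        self.next_free += size

    def _translate(self, name, guest_addr):
        # Map a guest address to a host address, refusing anything
        # that falls outside the VM's own partition.
        base, size = self.partitions[name]
        if not 0 <= guest_addr < size:
            raise IndexError(f"{name} tried to access address {guest_addr}")
        return base + guest_addr

    def write(self, name, guest_addr, value):
        self.host_memory[self._translate(name, guest_addr)] = value

    def read(self, name, guest_addr):
        return self.host_memory[self._translate(name, guest_addr)]


hv = ToyHypervisor(host_memory_size=8)
hv.create_vm("vm_a", 4)
hv.create_vm("vm_b", 4)

# Both VMs write to "their" address 0 without interfering with each other.
hv.write("vm_a", 0, "A")
hv.write("vm_b", 0, "B")
print(hv.read("vm_a", 0), hv.read("vm_b", 0))  # prints: A B
```

Real hypervisors also have to mediate CPU time, devices, and interrupts, usually with hardware assistance. The sketch covers only the address-translation idea.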

In the figure above, each VM runs its own software stack, complete with its own operating system (OS) of choice and the companion software – such as libraries – needed by its applications.

If the physical infrastructure is a personal computer, the hypervisor allows that laptop/desktop to run multiple OSs, each in its own VM. Personal computers need a host OS with the hypervisor as an application on top of that. This is how a host Linux machine can run Windows as a guest in its own VM for certain applications such as Microsoft Office. When the physical resource is a server, as in an enterprise data center, the server can be virtualized by running each enterprise application in its own VM silo. Enterprise servers typically run the hypervisor on top of the “bare metal”, without a host OS.

Many enterprise applications depend on a particular OS or a purely proprietary software stack which, without virtualization, would have required separate servers running that OS and software. And, depending on the application’s traffic characteristics, such a dedicated server may never be used to its full capacity. With virtualization, each application runs in its own self-contained VM environment. Thus, by adding VMs until each server’s resources are maximally utilized, an enterprise can reduce the number of servers it needs. This saves on both capital expenditure (CAPEX) and operating expenditure (OPEX).
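The consolidation argument can be sketched as a simple packing problem. The example below uses the first-fit-decreasing heuristic, which is one common way to approximate such a packing and is not something the post itself prescribes. The function name `consolidate`, the demand figures, and the single "percent of one server" resource are all hypothetical simplifications.

```python
def consolidate(vm_demands, server_capacity):
    """Place VMs on as few servers as first-fit-decreasing manages to use.

    vm_demands: resource demand of each VM (here, percent of one server).
    Returns a list of servers, each a list of the demands placed on it.
    """
    servers = []
    for demand in sorted(vm_demands, reverse=True):
        if demand > server_capacity:
            raise ValueError(f"a VM needing {demand} cannot fit on any server")
        for server in servers:
            if sum(server) + demand <= server_capacity:
                server.append(demand)   # fits on an existing server
                break
        else:
            servers.append([demand])    # no room anywhere: add a server
    return servers


# Eight applications that would each need a dedicated server without VMs.
demands = [20, 35, 10, 50, 25, 15, 30, 5]
placement = consolidate(demands, server_capacity=100)

print(len(demands), "dedicated servers ->", len(placement), "virtualized servers")
for number, server in enumerate(placement, start=1):
    print(f"server {number}: {server} (utilization {sum(server)}%)")
```

With these made-up numbers, eight lightly loaded applications fit on two servers. A real placement would also have to account for memory, I/O, peak load, and failover headroom.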

Cloud providers take this picture one step further, by creating and running data centers with massive server farms on a global scale. They rent computing as a utility by creating VM instances for each customer, spinning up or taking down such instances as the customer requires. This is how cloud providers meet the elastic demand of millions of users. Cloud service customers can run anything they wish in their VMs, legacy applications as well as cloud-native ones. This type of cloud deployment is called Infrastructure-as-a-Service, which we described in a previous post together with its companion offerings.

Containers

While VMs on clouds provide an easy way to scale computing power on demand, a customer has to predict how much might be needed, as it takes a certain amount of time to spin up a VM. Waiting until the demand spikes may result in lost revenue from unserved requests, while purchasing too much in advance leads to wasted capacity and higher non-revenue producing costs – exactly the situation that a cloud solution is expected to mitigate.
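A small simulation can illustrate this trade-off. It is a sketch under stated assumptions, not a model of any real cloud provider: the function `simulate`, the demand trace, the per-instance capacity, and the delay values are all hypothetical. Instances are ordered only once demand exceeds capacity, they become usable a fixed number of ticks later, and the sketch never scales back down.

```python
def simulate(demand, capacity_per_instance, spin_up_delay, initial_instances):
    """Return (unserved requests, wasted capacity) for a demand trace.

    spin_up_delay is the number of ticks (at least 1) between ordering
    an instance and that instance being able to serve requests.
    """
    running = initial_instances
    pending = []                 # ticks at which ordered instances become ready
    unserved = wasted = 0
    for tick, load in enumerate(demand):
        # Instances whose spin-up has finished join the running pool.
        running += sum(1 for ready in pending if ready <= tick)
        pending = [ready for ready in pending if ready > tick]

        capacity = running * capacity_per_instance
        unserved += max(0, load - capacity)
        wasted += max(0, capacity - load)

        # React to a shortfall, counting instances already on order.
        needed = -(-load // capacity_per_instance)   # ceiling division
        shortfall = needed - running - len(pending)
        pending.extend([tick + spin_up_delay] * max(0, shortfall))
    return unserved, wasted


demand = [100, 100, 400, 400, 400, 100]   # requests per tick, with a spike

print(simulate(demand, 100, spin_up_delay=2, initial_instances=1))  # (600, 300)
print(simulate(demand, 100, spin_up_delay=2, initial_instances=4))  # (0, 900)
print(simulate(demand, 100, spin_up_delay=1, initial_instances=1))  # (300, 300)
```

Reacting only when the spike arrives drops 600 requests, provisioning for the peak in advance drops none but leaves 900 units of capacity idle, and halving the spin-up delay halves the dropped requests without extra idle capacity.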

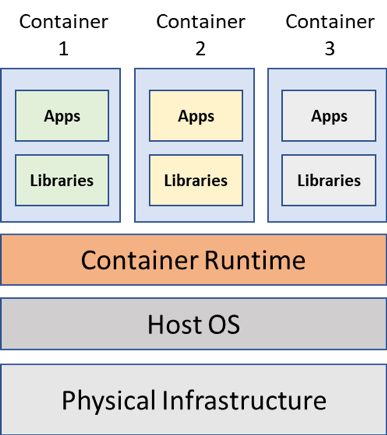

Containers are a lightweight solution that preserves the main benefit of VMs – isolation of users and applications in their own silos on a shared hardware platform – while increasing performance and improving server resource utilization for varying loads. As the figure above shows, a container holds only an application and any associated software – such as libraries – it needs to run, but does not include an OS of its own. It uses the OS of the underlying host for those functions. Without a bundled OS, a container is much smaller, and a new instance can be created much more quickly.

All containers on a server use the underlying OS of the host server. A container runtime (instead of a hypervisor) maintains the isolation of each container’s processes from those of others, while all of them share the host OS’s kernel. Thus, it is sometimes said that containerization virtualizes the OS, while virtualization virtualizes the hardware. Operating systems such as Linux come with container support built into the kernel.

Given the size reduction of containers, thousands of these self-contained bits of code can run on a server. A bonus that emerges from this technology is that a container is portable from one server to another that supports the same OS. Thus, a developer can create and test a containerized application on a laptop and then, if everything checks out, move it to a cloud server. If the application is suddenly successful, more instances can be spun up very quickly – and in different regions or even different clouds, if needed, with minimal integration.

Containerization’s popularity fits with another trend in software development, which we described in an earlier blog post – the move to microservices. The microservices approach allows complex applications to be broken up into smaller, modular units that perform specific functions and can be reused in many contexts. Containers form the ideal deployment unit for a microservice. As a self-contained piece of code, the microservice can be deployed within a container and efficiently scaled by the cloud.

Yet another piece of software is needed for the efficient use of containers – an orchestration engine – which manages the deployment and movement of containers across servers. The leading example, Kubernetes, will be the subject of a future post.